Tuchsure is a multisensory interaction system designed for visually impaired users to help them perceive risks they cannot see. By integrating object recognition, edge inference, audio feedback, and tactile vibration, the system provides an intuitive, trust-building experience that reduces anxiety and increases safety and autonomy in daily life.

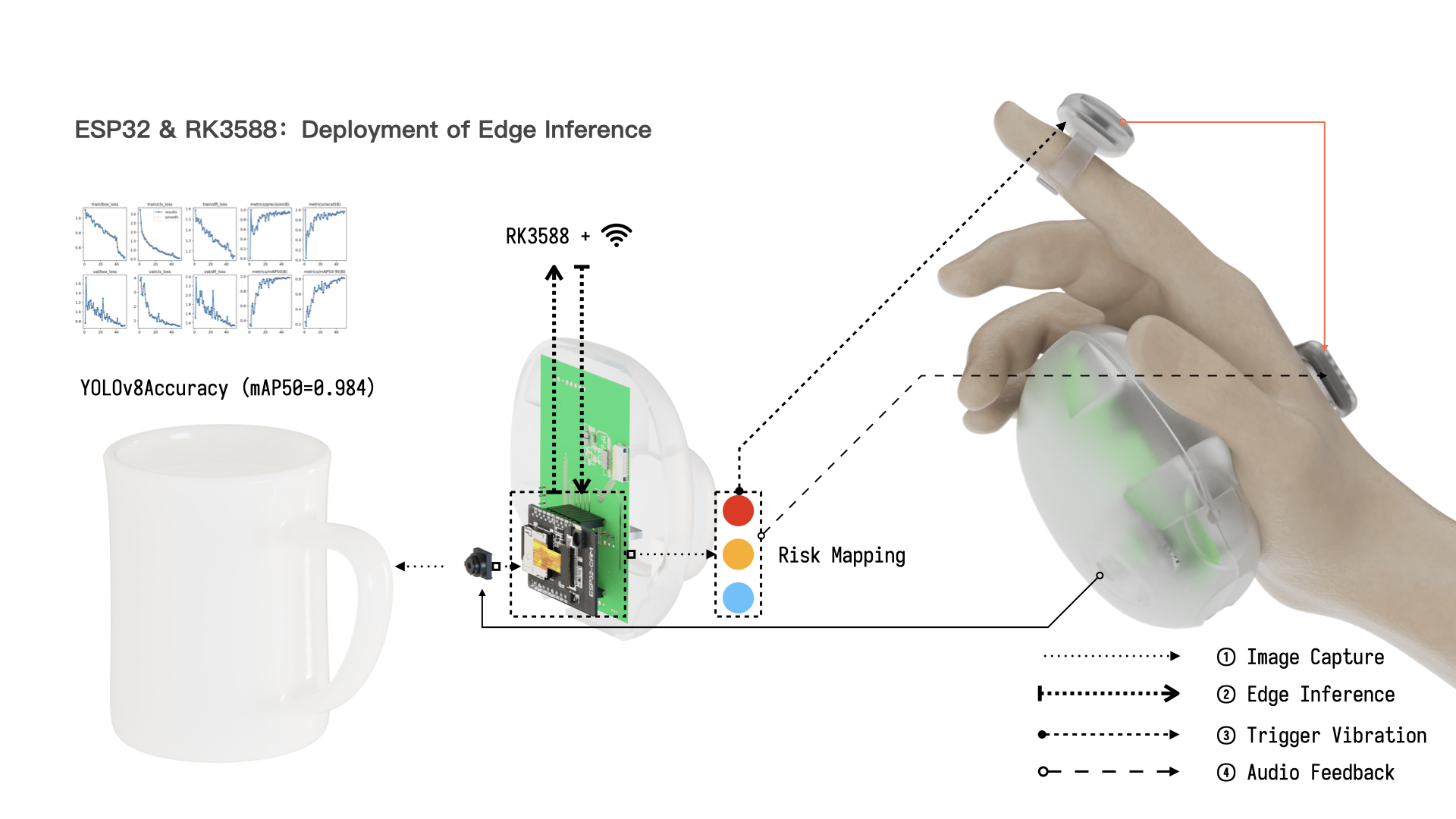

The device captures environmental images through an embedded camera, processes object-level risk using an RK3588 edge-computing module, and communicates risk levels through differentiated vibration and audio cues.

Visually impaired users lose more than access to visual information; they also lose risk awareness. Traditional assistive tools help identify obstacles but fail to interpret object-level danger (e.g., scissors vs. a cup).

Tuchsure aims to restore a sense of security through weak-perception safety design: combining multimodal sensory cues to form reliable, human-centered risk awareness.

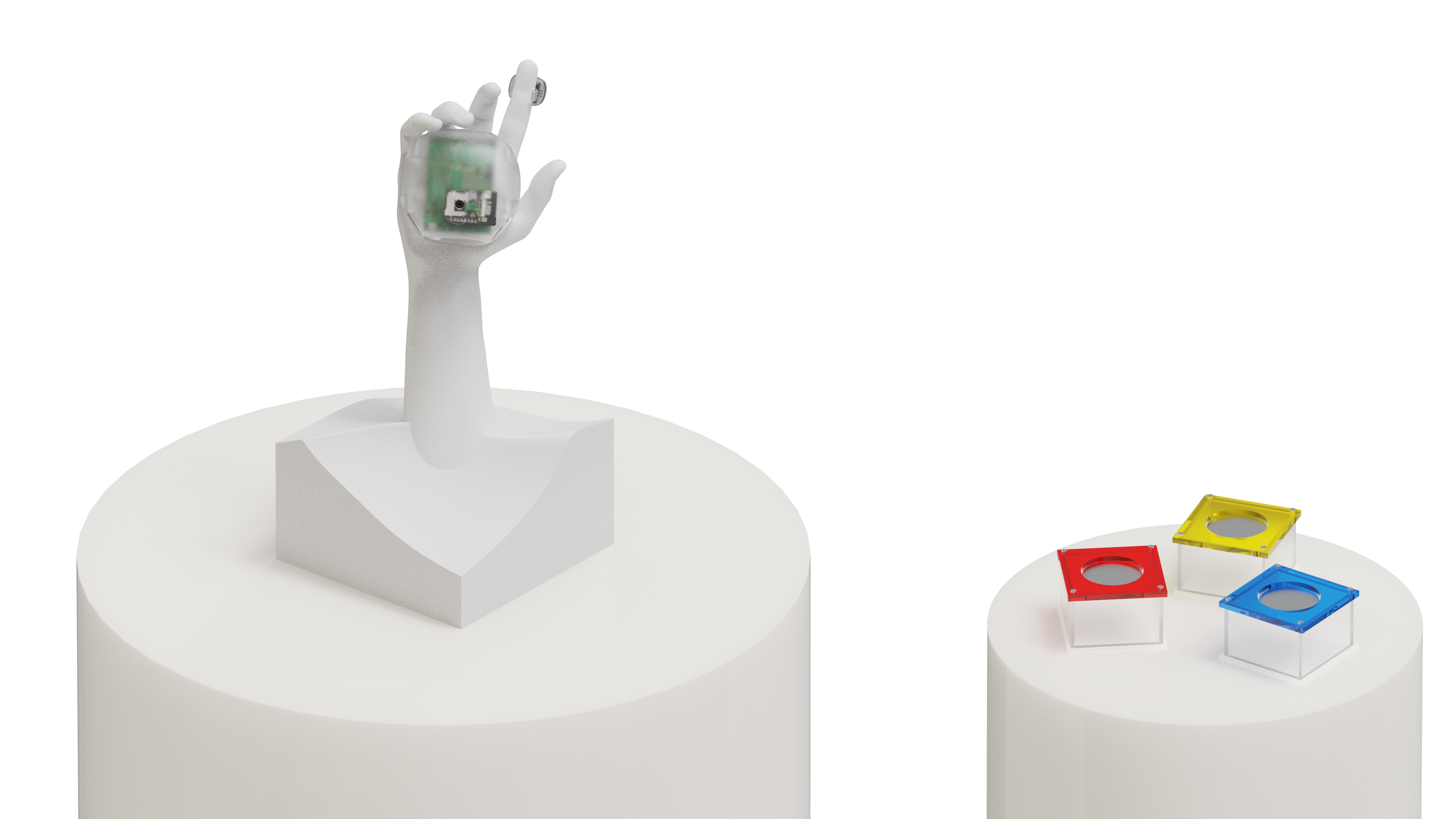

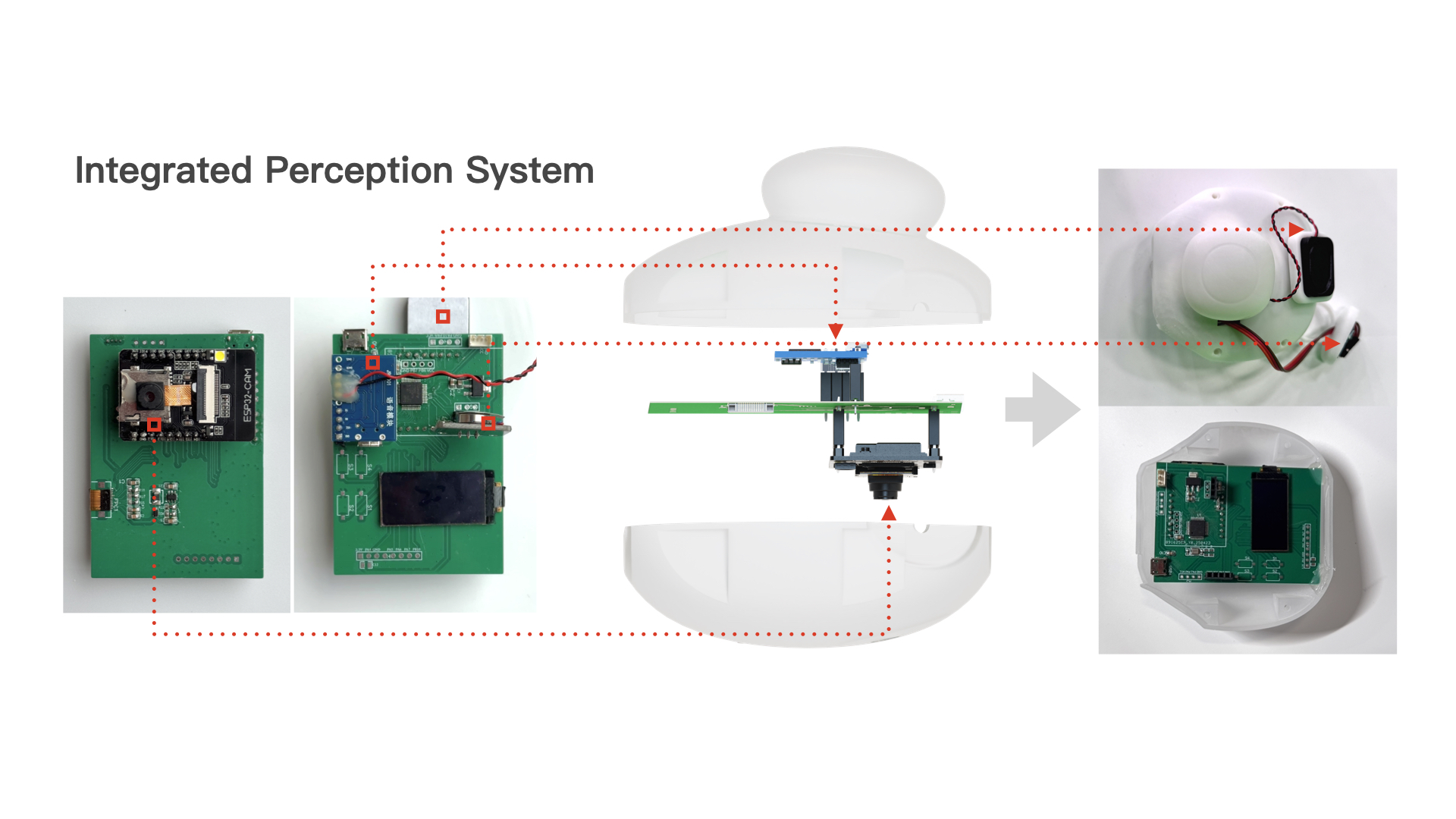

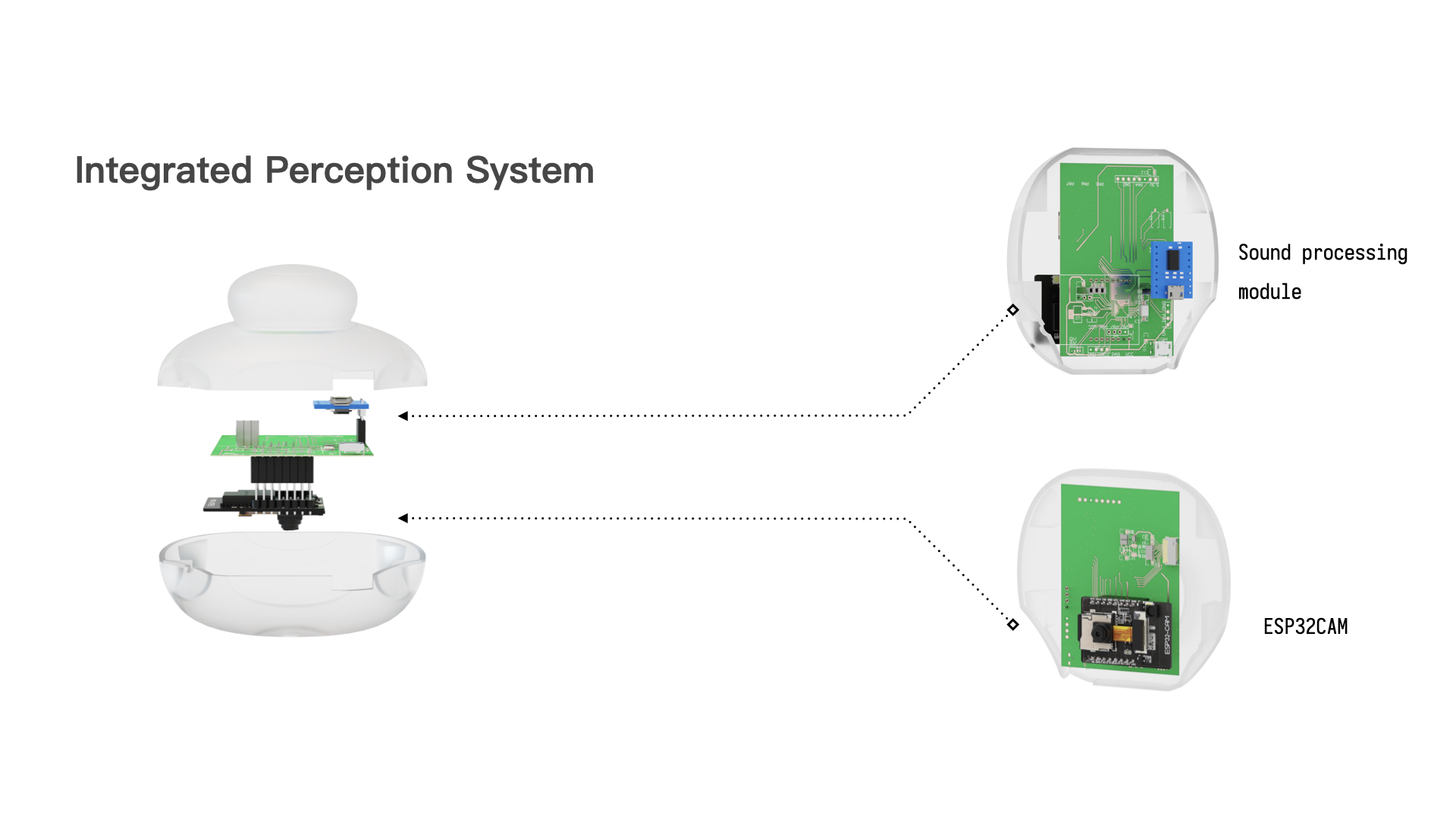

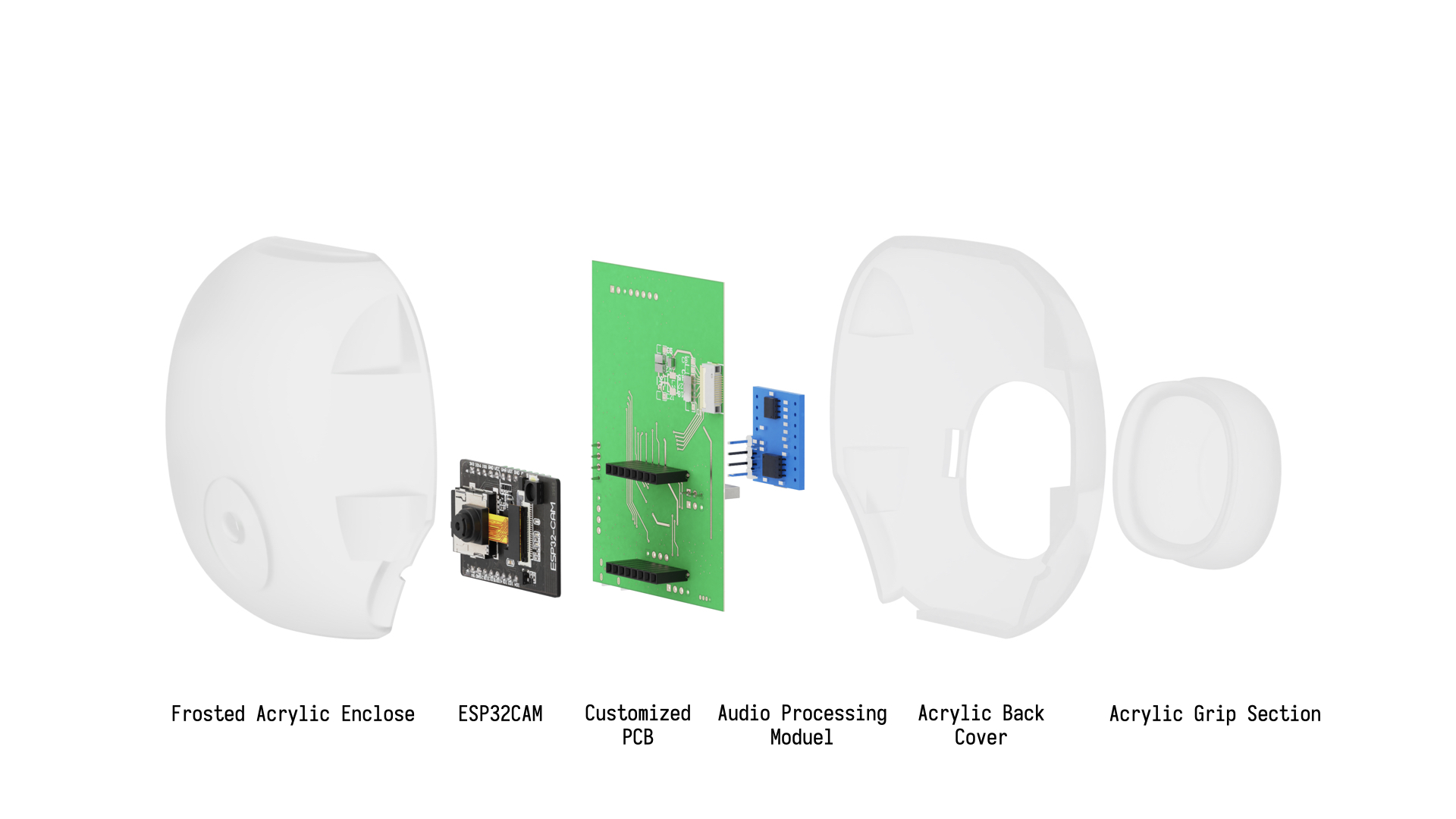

The system is built around an integrated perception module:

- ESP32-CAM for image capture

- RK3588 for YOLOv8 risk inference (mAP50 = 0.984)

- Custom PCB

- Vibration motor (finger-mounted)

- Audio notification module (palm-mounted)

- Frosted acrylic enclosure & ergonomic grip

Pipeline:

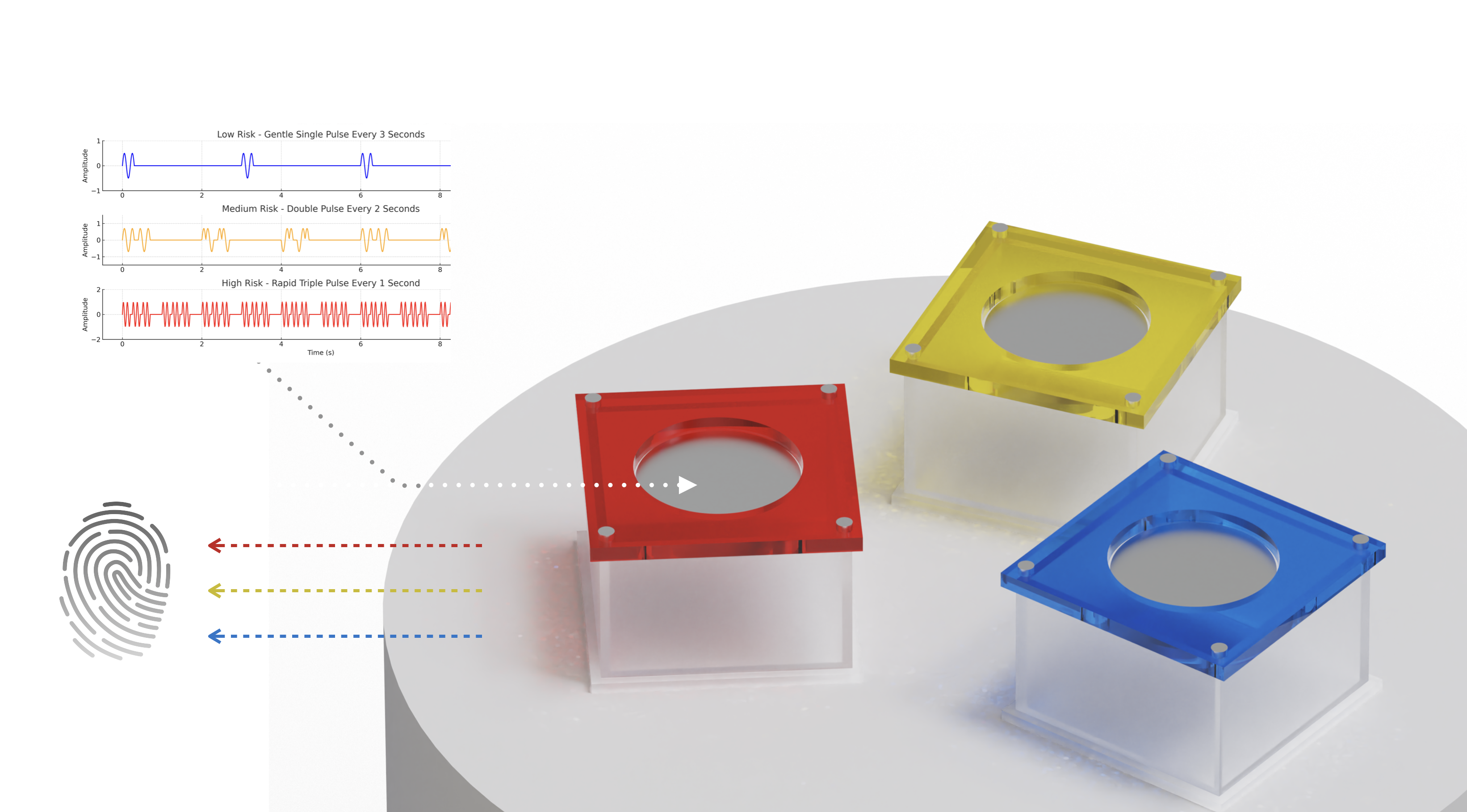

1) Image Capture → 2) Edge Inference → 3) Risk Mapping → 4) Vibration + Audio Feedback
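The risk-mapping step (stage 3) can be illustrated with a minimal Python sketch. It is not the device firmware: the `Detection` and `Feedback` types, the label-to-risk table, the confidence threshold, and the vibration timings are all hypothetical placeholders chosen for illustration. It assumes the detector returns a list of labeled detections with confidence scores, and that the three risk levels correspond to three vibration patterns.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional, Sequence, Tuple


class Risk(IntEnum):
    LOW = 0
    MEDIUM = 1
    HIGH = 2


# Hypothetical label-to-risk table; a real one would cover every detector class.
RISK_BY_LABEL = {
    "cup": Risk.LOW,
    "scissors": Risk.HIGH,
}

# Hypothetical vibration patterns: (pulse_ms, pause_ms, repeats).
VIBRATION_BY_RISK = {
    Risk.LOW: (100, 400, 1),
    Risk.MEDIUM: (150, 200, 2),
    Risk.HIGH: (250, 80, 4),
}


@dataclass(frozen=True)
class Detection:
    label: str         # class name reported by the detector
    confidence: float  # detector score in [0, 1]


@dataclass(frozen=True)
class Feedback:
    risk: Risk
    vibration: Tuple[int, int, int]  # pattern for the finger-mounted motor
    speech: str                      # object name for the audio module


def map_risk(
    detections: Sequence[Detection], min_confidence: float = 0.5
) -> Optional[Feedback]:
    """Pick the most dangerous confident detection and map it to feedback."""
    candidates = [d for d in detections if d.confidence >= min_confidence]
    if not candidates:
        return None  # nothing confident enough to report

    def risk_of(d: Detection) -> Risk:
        # Unknown labels default to MEDIUM (an assumption, erring on caution).
        return RISK_BY_LABEL.get(d.label, Risk.MEDIUM)

    # Highest risk wins; confidence breaks ties.
    top = max(candidates, key=lambda d: (risk_of(d), d.confidence))
    risk = risk_of(top)
    return Feedback(risk=risk, vibration=VIBRATION_BY_RISK[risk], speech=top.label)


if __name__ == "__main__":
    frame = [Detection("cup", 0.9), Detection("scissors", 0.7)]
    print(map_risk(frame))
```

Reporting only the highest-risk object per frame keeps the feedback to a single vibration pattern and a single spoken label, which is one plausible way to avoid overloading the user when several objects are in view.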

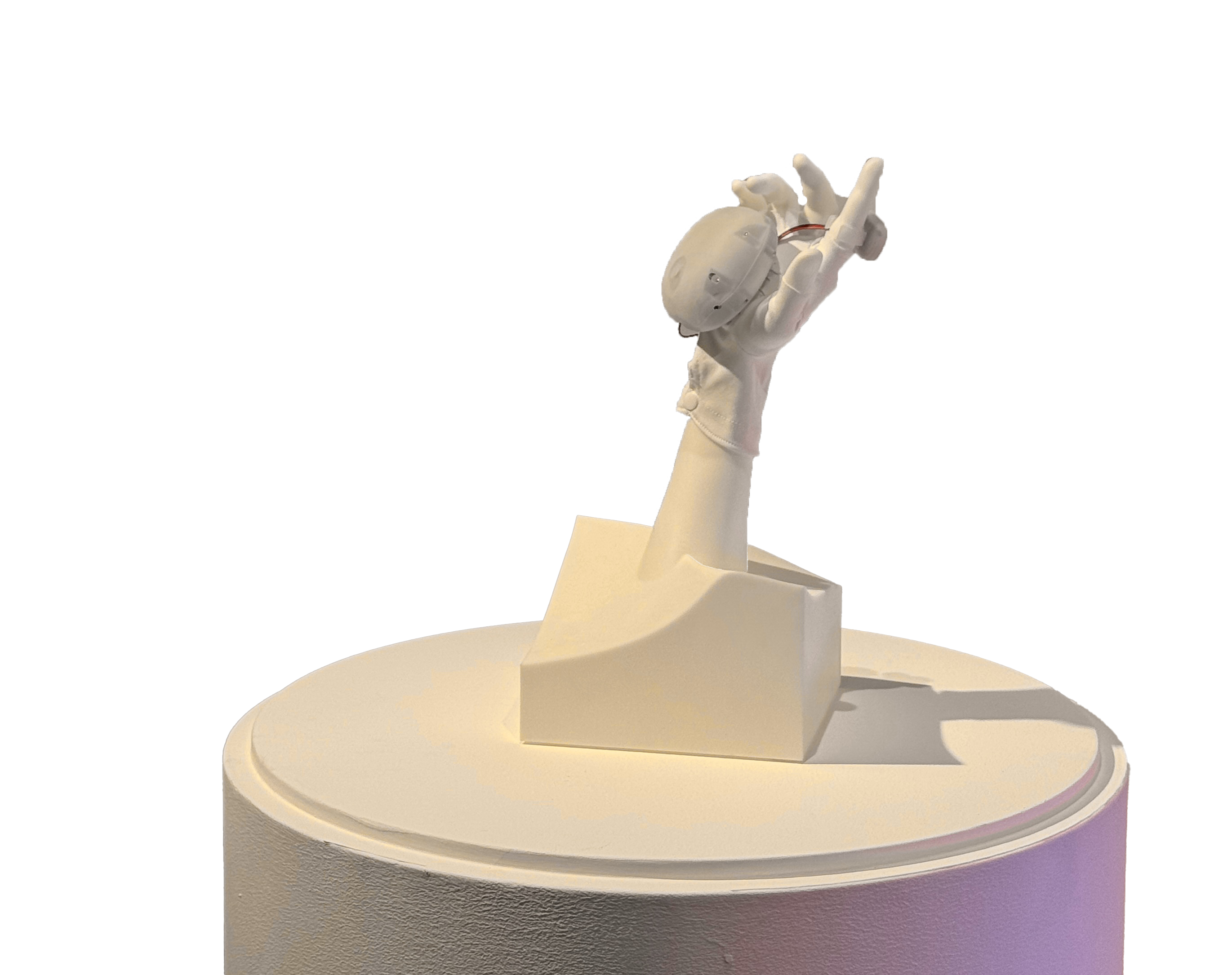

The device is designed around three intuitive actions:

- Hold (form-follows-finger ergonomics)

- Feel (localized vibration on the fingertip, where tactile sensitivity is highest)

- Listen (fast auditory recognition of object category)

This multimodal feedback aligns with Baddeley’s working memory model, enhancing rapid recall and situational safety.

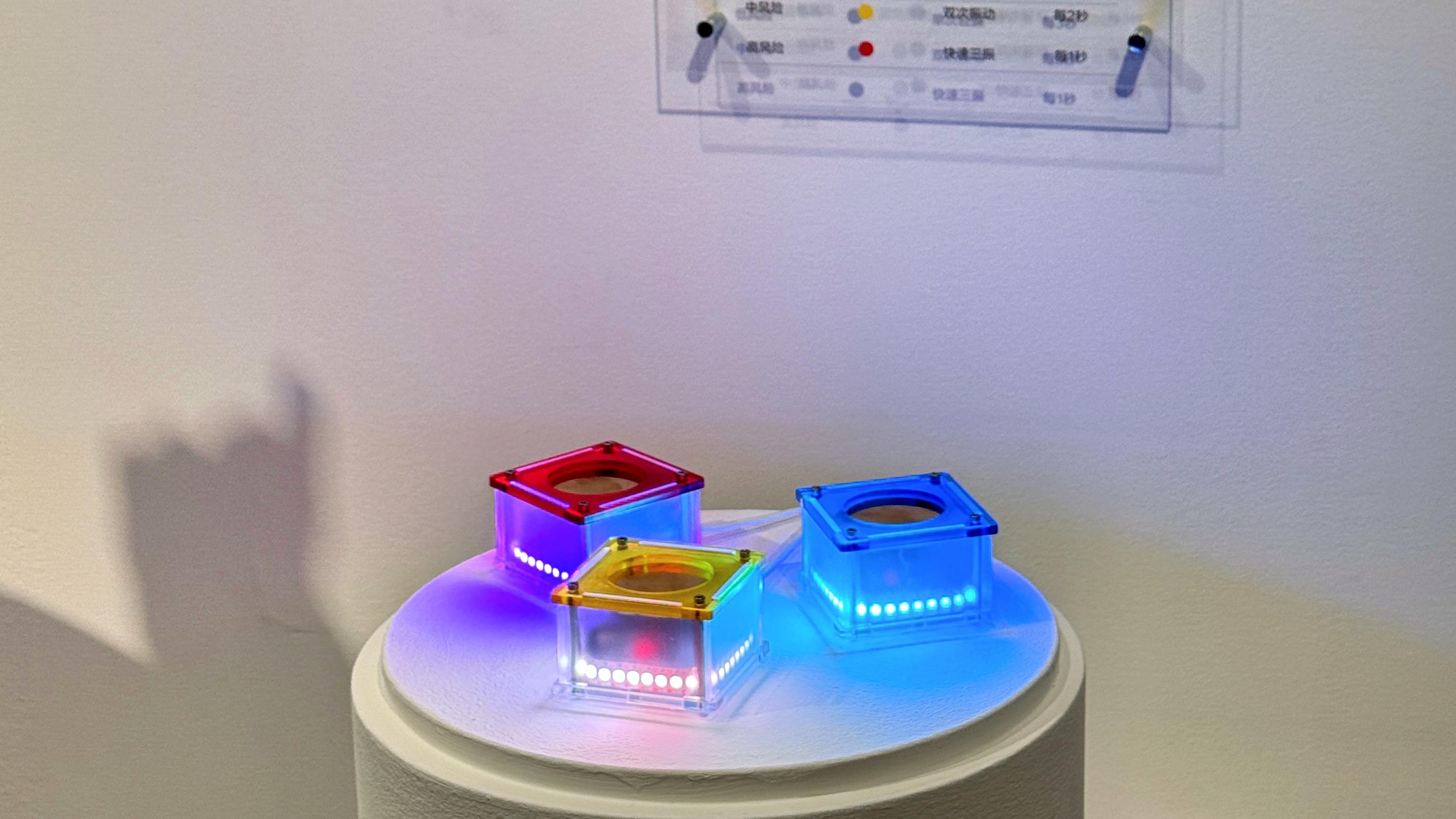

Three vibration modes communicate graded risk levels.

Audio provides semantic confirmation, while vibration offers spatial, fast-response cues.

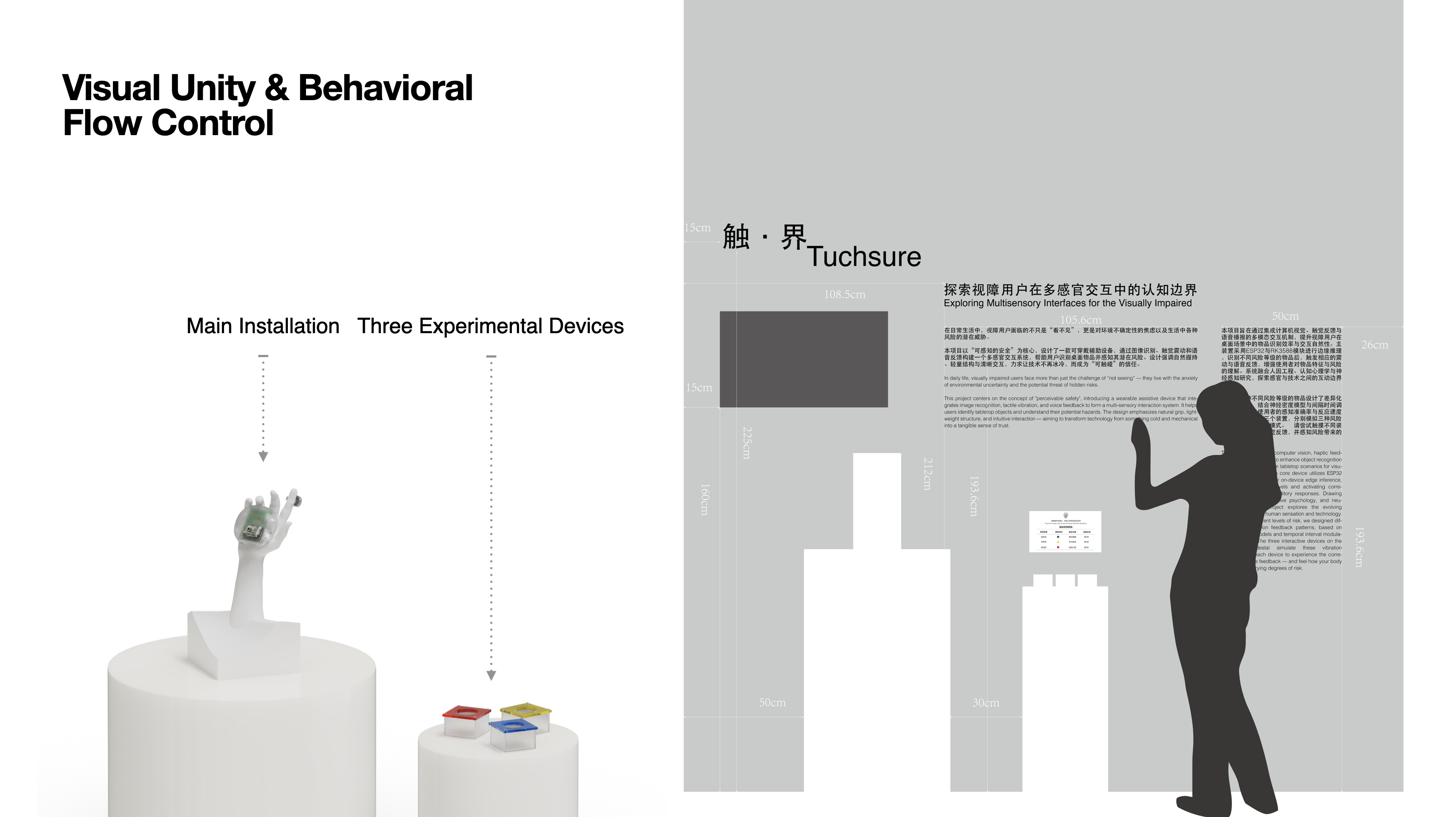

The exhibition includes:

- Main installation: demonstration of the wearable device

- Three experimental devices: tactile coding test stations

- Visual panels & demonstration video

- Interactive flow guiding visitors through recognition → vibration → interpretation

This creates a coherent narrative around risk perception and multisensory interaction.

How can multimodal interaction empower visually impaired users to perceive hidden risks in daily life?
